The Gaussian Process Latent Autoregressive Model

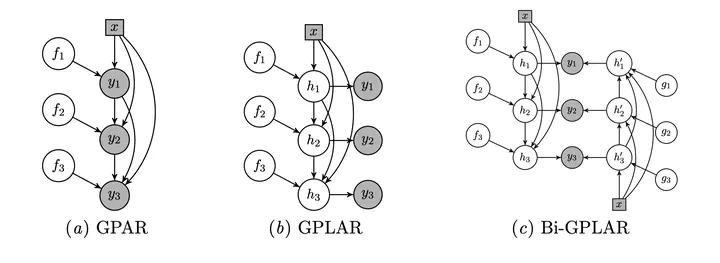

Graphical models for GPAR, GPLAR and bi-GPLAR.

Many real-world prediction problems involve modelling the dependencies between multiple outputs across the input space. Multi-output Gaussian Processes (MOGPs) are a particularly important approach to such problems. In this paper, we build on the Gaussian Process Autoregressive Regression (GPAR) model, which is one of the best-performing MOGP models but fails when observation noise is large, when data are missing, and when non-Gaussian observation models are required. We extend the original GPAR model to handle these settings and provide a variational inference procedure, similar to that used in deep Gaussian Processes, which replaces the ad hoc denoising approximation used in the original work. We show that the new approach naturally handles noisy outputs and missing data, and that it also enables the model to handle heterogeneous non-Gaussian observation models.
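
As a rough sketch of the distinction drawn above, the two constructions can be contrasted as follows. The notation is an assumption introduced here for illustration and is not taken from the text: $x$ is the input, $y_1, \dots, y_M$ are the outputs in a fixed order, $f_m$ and $g_m$ are functions with Gaussian process priors, and $\varepsilon_m$ is observation noise. GPAR chains the outputs through the observed values themselves:

$$
p(y_1, \dots, y_M \mid x) = \prod_{m=1}^{M} p\big(y_m \mid x, y_1, \dots, y_{m-1}\big),
\qquad
y_m(x) = f_m\big(x, y_1(x), \dots, y_{m-1}(x)\big) + \varepsilon_m .
$$

A latent variant of the kind described here would instead chain noise-free latent functions and attach a separate observation model to each output:

$$
f_m(x) = g_m\big(x, f_1(x), \dots, f_{m-1}(x)\big),
\qquad
y_m(x) \sim p\big(y_m \mid f_m(x)\big).
$$

Under this reading, noisy or missing values of $y_m$ no longer enter the inputs of later outputs directly, and each likelihood $p(y_m \mid f_m(x))$ may be chosen per output, which is what would permit heterogeneous non-Gaussian observation models.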